ALKS – Consumer Confidence Safety Framework

Tom Leggett is the Lead Research Engineer for Automated Driving at Thatcham Research. This is the final post in a series offering behind-the-scenes insights into the development of a world-first consumer rating for Automated Driving Systems.

This post summarises the work to create a Consumer Safety Confidence Rating Framework for automated vehicle technologies such as Automated Lane Keeping Systems (ALKS). The project aims to ensure that the full societal benefits of such systems can be realised through widespread consumer adoption.

As mentioned in previous posts, which can all be found here, we recognise the important role that virtual testing will play in thoroughly exercising systems in a safe environment, and so in building confidence in the technology. The complex nature of ALKS and other automated systems requires a uniquely broad approach to testing, and virtual testing can provide part of the solution, if used properly.

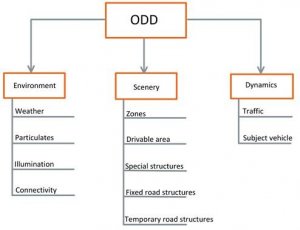

The key philosophy of the ALKS Consumer Safety Confidence Framework is that the safety rating of the vehicle under test must be a function of its Operational Design Domain (ODD). The ODD represents the operating environment within which ALKS can perform the driving task. This could be a geographical limit, such as motorways only, or a functional one, such as a maximum speed of 60 km/h.
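To make the idea of an ODD more concrete, here is a minimal sketch that models one as a simple data structure and checks whether a given set of driving conditions falls inside it. It assumes an ODD described by only two attributes, permitted road types and a maximum speed, mirroring the motorway and 60 km/h examples above. The class and field names (`OperationalDesignDomain`, `road_types`, `max_speed_kph`) are hypothetical and are not part of the framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical, minimal ODD: a geographical limit (road types) and a functional limit (speed)."""

    road_types: frozenset[str]
    max_speed_kph: float

    def contains(self, road_type: str, speed_kph: float) -> bool:
        """Return True if the given conditions lie inside this ODD."""
        return road_type in self.road_types and speed_kph <= self.max_speed_kph


# Example ODD: motorways only, up to 60 km/h.
alks_odd = OperationalDesignDomain(road_types=frozenset({"motorway"}), max_speed_kph=60.0)

print(alks_odd.contains("motorway", 50.0))  # True
print(alks_odd.contains("urban", 50.0))     # False: outside the geographical limit
print(alks_odd.contains("motorway", 70.0))  # False: above the functional limit
```

Because the rating depends on the ODD, a check like `contains` would decide which conditions are relevant to the vehicle under test in the first place.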

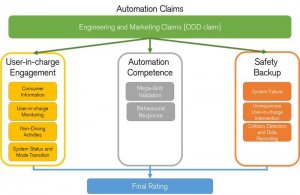

The framework begins with the Automation Claims, where the driving domain of the vehicle, as provided by the carmaker, is compared against various assessment criteria. This ODD "checklist" will inform which tests are carried out virtually and which are validated physically. In addition to the checklist, further information such as marketing material will be gathered and assessed. In this way, we can ensure that advertising claims about the technology match its actual measured performance.

It is proposed that the vehicle manufacturer executes the virtual tests, using scenarios supplied in an open-source Scenario Description Language format. Once generated and formatted, the scenarios are stored in the SafetyPool™ scenario database, where all relevant groups can easily assess them.

However, as stressed previously, the results of the virtual tests must then be spot-checked through independent physical testing at CAM Testbed UK sites. The method for choosing which verification tests to run is not fully defined within this framework, but it will involve a combination of random selection, edge case identification, failure point validation and other specifically recognised scenarios. This allows us to comprehensively review the claimed performance against the measured performance, which informs the overall rating of the automated vehicle.
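Since the selection method is left open, the following is only one possible sketch of combining the ingredients listed above: it always includes scenarios flagged (for example as edge cases or failure points), then tops up with a seeded random sample of the remaining virtual tests. The scenario IDs, flag names, function name and sample size are all hypothetical assumptions, not part of the framework.

```python
import random


def select_physical_checks(
    virtual_results: dict[str, set[str]],
    n_random: int,
    seed: int = 0,
) -> list[str]:
    """Pick scenario IDs for physical spot-checks (hypothetical selection rule).

    virtual_results maps a scenario ID to a set of flags, e.g. {"edge_case"},
    {"failure_point"} or an empty set. Every flagged scenario is selected,
    then up to n_random unflagged scenarios are added at random.
    """
    flagged = sorted(sid for sid, flags in virtual_results.items() if flags)
    unflagged = sorted(sid for sid, flags in virtual_results.items() if not flags)
    rng = random.Random(seed)  # seeded so the selection is reproducible and auditable
    sampled = rng.sample(unflagged, k=min(n_random, len(unflagged)))
    return flagged + sorted(sampled)


results = {
    "S001": set(),
    "S002": {"edge_case"},
    "S003": set(),
    "S004": {"failure_point"},
    "S005": set(),
    "S006": set(),
}
checks = select_physical_checks(results, n_random=2)
print(checks)
```

Seeding the random component is a design choice that lets an independent party reproduce exactly which scenarios were drawn.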

Vehicle manufacturers should be rewarded not only for providing a wide-ranging ODD, but also for describing it accurately and performing effectively within it. This balance between claimed capability and measured assessment performance will feed into three sections: User-in-Charge Engagement, Automation Competence and Safety Backup. These sections will in turn generate the overall rating.
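One simple way to express how accurately an ODD has been described is the share of physically tested scenarios whose measured outcome agrees with the claimed (virtual) outcome. The sketch below assumes pass/fail results keyed by hypothetical scenario IDs; it is an illustration only, and how such a measure would feed into the three sections is set out in the framework document, not here.

```python
def claim_agreement(claimed: dict[str, bool], measured: dict[str, bool]) -> float:
    """Fraction of physically tested scenarios whose measured pass/fail matches the claimed result.

    Only scenarios present in both mappings are compared.
    """
    common = claimed.keys() & measured.keys()
    if not common:
        raise ValueError("no scenarios were both claimed and physically measured")
    matches = sum(claimed[sid] == measured[sid] for sid in common)
    return matches / len(common)


claimed = {"S002": True, "S004": False, "S005": True, "S006": True}
measured = {"S002": True, "S004": False, "S005": False, "S006": True}
print(claim_agreement(claimed, measured))  # 0.75
```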

To find out more about the Framework, including its three sections, download the complete framework document here. If you would like to talk to me directly about anything mentioned in this blog series, please contact me at tom.leggett@thatcham.org.

Thank you for joining me along this journey towards safe automation.